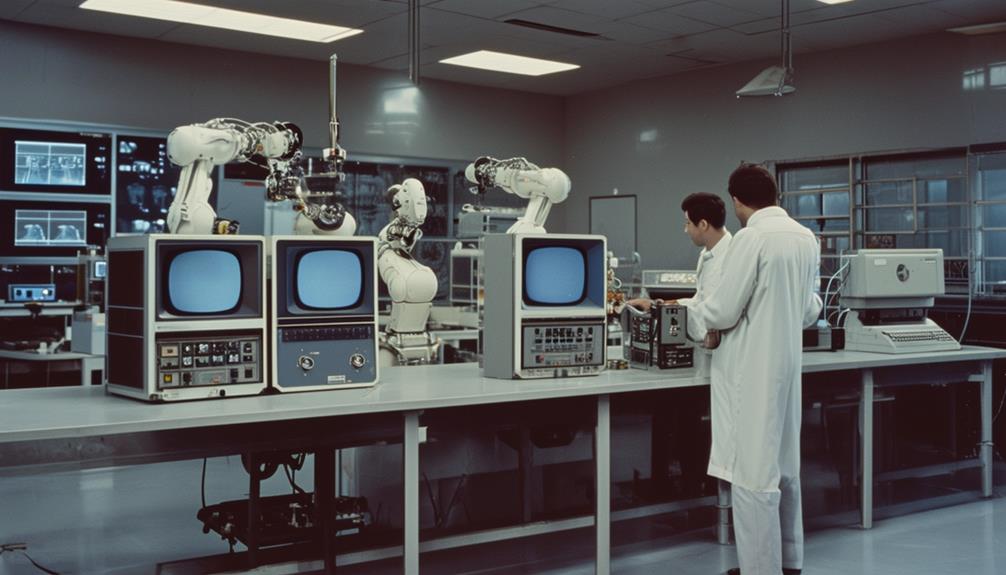

Perceptrons and the Birth of Neural Networks During the 1960s

Imagine the 1960s, a time when the idea of machines replicating human cognition was just beginning to take shape. At the forefront was Frank Rosenblatt, who introduced the perceptron—a pioneering model inspired by the workings of a single neuron. These early days were filled with both enthusiasm and skepticism, as perceptrons showed potential for learning from labeled data. However, they also faced significant limitations that influenced the trajectory of artificial intelligence. What were these constraints, and how did they shape the future of AI? Let's explore the journey from these early models to today's sophisticated neural networks.

Origins of Perceptrons

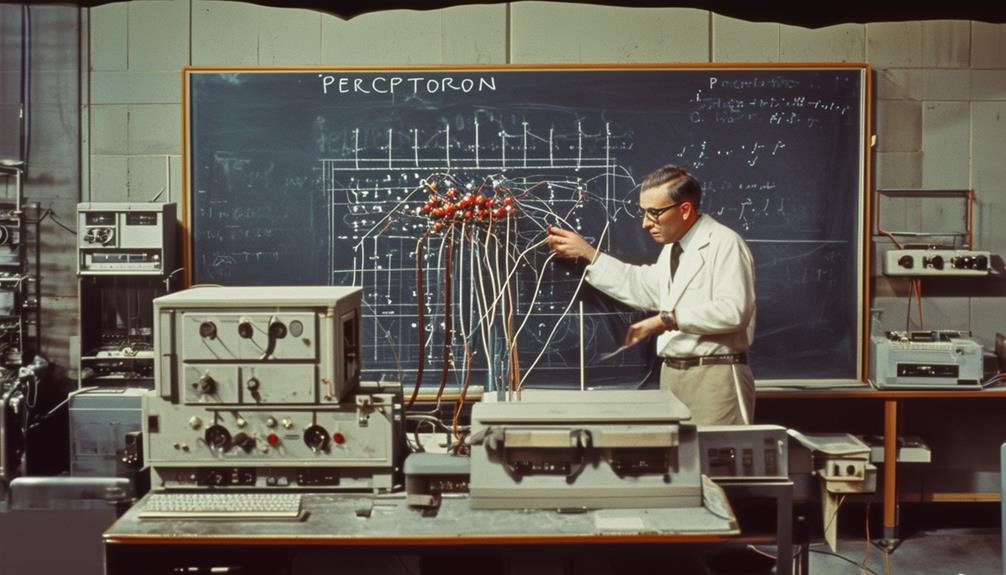

Perceptrons trace back to the late 1950s, when Frank Rosenblatt introduced them as one of the first trainable neural network models. They were groundbreaking because they modeled a single neuron and could perform binary classification. Rosenblatt's model built on the McCulloch-Pitts neuron, which applied a threshold activation function to its input signals to determine its output.

A perceptron is designed to take in multiple input features and, by applying learned weights, classify the inputs into one of two categories. The process of learning the appropriate weights occurs through exposure to labeled training data, allowing the perceptron to adjust and refine its decision-making abilities over time.

The simplicity and effectiveness of perceptrons in handling binary classification marked a significant milestone in the development of neural networks. They demonstrated the feasibility of machines learning from experience and adjusting their behavior accordingly. Understanding the foundational role of perceptrons and Rosenblatt's contributions offers valuable insights into the evolution of modern neural networks.

Frank Rosenblatt's Contribution

Frank Rosenblatt first described the perceptron in a 1957 technical report and published it more widely in 1958, a landmark in neural network development. His creation demonstrated early AI capabilities by distinguishing patterns, a remarkable achievement at the time. Rosenblatt's work laid the groundwork for the evolution of modern neural networks and deep learning technologies.

Rosenblatt's research sparked significant discussions within the AI community, particularly with Marvin Minsky. These debates were crucial as they highlighted both the potential and limitations of perceptrons. While Rosenblatt championed the perceptron's capabilities, Minsky pointed out its constraints in handling more complex tasks. This dialogue was instrumental in steering AI research towards addressing these limitations and fostering advancements.

Today, Rosenblatt's legacy continues to influence AI. His foundational work in neural network development has led to sophisticated AI systems. As AI research progresses, Rosenblatt's perceptron remains a critical reference point, inspiring new generations of AI researchers and developers.

The Perceptron Model

The perceptron model is a foundational concept in the development of neural networks. It consists of a single layer of neurons that adjust their weights based on input features to produce binary outputs. Understanding its structure and learning algorithm provides insight into how early neural networks learned to classify simple patterns.

Basic Perceptron Structure

Imagine a single layer of input nodes, each connected to an output node, forming the basic structure of a perceptron. Introduced by Frank Rosenblatt in 1957, this simple yet powerful model became a cornerstone of neural networks in the 1960s. In perceptrons, each connection between input and output has an associated weight, which is adjusted during training to improve accuracy. The perceptron functions as a linear classifier, capable of recognizing and classifying simple patterns based on a linear decision boundary.

Due to its mathematical simplicity, the perceptron was ideal for early explorations into machine learning and neural networks. It operates by summing the weighted inputs and passing the result through an activation function to produce an output. This structure laid the groundwork for more complex neural network architectures used today.
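The weighted sum and activation described above can be sketched in a few lines of Python. This is a minimal illustration, not Rosenblatt's original formulation: the function names, the bias term (which plays the role of a negative threshold), and the AND-gate weights are all assumptions chosen for the example.

```python
from typing import Sequence


def step(z: float) -> int:
    """Threshold activation: output 1 when the weighted sum reaches zero."""
    return 1 if z >= 0 else 0


def perceptron_output(inputs: Sequence[float], weights: Sequence[float], bias: float) -> int:
    """Sum the weighted inputs, add the bias, and apply the threshold."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(weighted_sum)


# Hypothetical, hand-picked weights that make the unit behave like a logical AND gate.
and_weights = [1.0, 1.0]
and_bias = -1.5

for pair in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(pair, "->", perceptron_output(pair, and_weights, and_bias))
```

Only the input (1, 1) pushes the weighted sum above the threshold, so it is the only input classified as 1.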

To better understand the perceptron, consider its key components:

| Component | Description |
|---|---|
| Input Nodes | Receive input signals |
| Output Node | Produces the final classification |
| Associated Weight | Adjusts the influence of each input |
| Linear Classifier | Separates data into classes using a linear boundary |

Learning Algorithm Explained

Building on the basic structure, the perceptron learning algorithm adjusts weights to reduce classification errors. The perceptron is a binary linear classifier: it predicts by applying a threshold function to a linear combination of the input features. The learning algorithm iterates through the training data and adjusts the connection weights whenever a prediction is wrong.

Here's how the perceptron learning algorithm works:

- Initialization: Start with random weights.

- Prediction: Calculate the output by applying the threshold function to the linear combination of input features and weights.

- Update Rule: Adjust the weights if the prediction is incorrect. For each misclassified input, update the weights using the formula:

\[
\text{new weight} = \text{old weight} + \text{learning rate} \times (\text{expected output} - \text{predicted output}) \times \text{input}
\]

- Iteration: Repeat the process for multiple epochs or until classification errors are minimized.
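The four steps above can be sketched as follows. This is a minimal sketch under stated assumptions rather than a definitive implementation: the names `predict` and `train_perceptron`, the bias term, the fixed random seed, the default learning rate, and the OR-gate training set are all illustrative choices that do not come from the original work.

```python
import random
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], int]


def predict(weights: Sequence[float], bias: float, inputs: Sequence[float]) -> int:
    """Prediction step: threshold the linear combination of inputs and weights."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total >= 0 else 0


def train_perceptron(
    samples: List[Sample],
    learning_rate: float = 0.1,
    max_epochs: int = 1000,
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Train with the perceptron update rule; stop early once an epoch has no errors."""
    rng = random.Random(seed)  # assumption: fixed seed so the run is reproducible
    n_features = len(samples[0][0])
    # Initialization: start with small random weights.
    weights = [rng.uniform(-0.5, 0.5) for _ in range(n_features)]
    bias = rng.uniform(-0.5, 0.5)

    for _ in range(max_epochs):  # Iteration: repeat over the data
        mistakes = 0
        for inputs, expected in samples:
            error = expected - predict(weights, bias, inputs)
            if error != 0:
                mistakes += 1
                # Update rule: new = old + learning_rate * (expected - predicted) * input
                weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
                bias += learning_rate * error  # bias acts as a weight on a constant input of 1
        if mistakes == 0:
            break
    return weights, bias


# Hypothetical, linearly separable training set: the logical OR function.
or_gate: List[Sample] = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias = train_perceptron(or_gate)
print([predict(weights, bias, x) for x, _ in or_gate])
```

Because OR is linearly separable, the loop reaches an epoch with no mistakes and stops early. On data that is not linearly separable it would simply run until `max_epochs`.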

Rosenblatt's model was limited to linearly separable data, but it laid the foundation for future advancements in neural network architectures and sparked significant interest in artificial intelligence research during the 1960s.

Learning Mechanisms

Early training algorithms defined how perceptrons learned from data. By comparing actual outputs with desired outcomes, perceptrons adjusted their weights to reduce classification errors. This error-driven approach is a form of supervised learning, and it is worth contrasting with unsupervised learning, since both are essential to modern neural networks.

Early Training Algorithms

The perceptron's learning mechanism, which adjusted weights based on misclassified examples, laid the groundwork for how modern neural networks are trained. At its core, the perceptron aimed to reduce errors by iteratively updating its weights. Each training cycle involved presenting an input, calculating the output, and comparing it to the expected result. If the output was incorrect, the perceptron adjusted its weights to reduce the discrepancy. This process repeated until the perceptron made no further errors or a set number of passes had elapsed.

Rosenblatt's perceptron algorithm fundamentally focused on:

- Weights: Adjusting these values to correct the classification.

- Errors: Identifying misclassifications to inform updates.

- Training: Iteratively presenting examples to refine accuracy.

- Update Mechanism: Modifying weights based on the difference between actual and expected outputs.
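As a worked illustration of a single update, assume a two-input perceptron with a bias term \(b\), a learning rate of 0.1, and an output of 1 whenever the weighted sum is at least 0. The symbols and starting values here are chosen for illustration only. Take weights \(w_1 = 0.2\), \(w_2 = -0.1\), \(b = 0\), the input \((1, 1)\), and an expected output of 0:

\[
\begin{aligned}
\text{weighted sum} &= (0.2)(1) + (-0.1)(1) + 0 = 0.1 \ge 0 \;\Rightarrow\; \text{predicted output} = 1 \\
\text{error} &= \text{expected output} - \text{predicted output} = 0 - 1 = -1 \\
w_1 &\leftarrow 0.2 + 0.1 \times (-1) \times 1 = 0.1 \\
w_2 &\leftarrow -0.1 + 0.1 \times (-1) \times 1 = -0.2 \\
b &\leftarrow 0 + 0.1 \times (-1) = -0.1
\end{aligned}
\]

With the updated values the weighted sum for the same input is \(0.1 - 0.2 - 0.1 = -0.2 < 0\), so the perceptron now outputs 0 and the example is classified correctly.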

This iterative training approach is a cornerstone of modern neural networks. The perceptron's ability to learn from errors and continually update itself was pioneering for its time. These early training algorithms demonstrated that a machine could 'learn' from its mistakes, setting the stage for the more complex neural networks we rely on today. By iteratively refining its weights, the perceptron improved its performance over time on the problems it could represent, embodying the essence of machine learning.

Supervised Vs. Unsupervised Learning

Understanding the distinction between supervised and unsupervised learning is crucial for determining the best approach to train your neural networks. Supervised learning involves training your model on labeled data, where each input is paired with the correct output. This method relies on a feedback loop to adjust the model's parameters, enabling accurate predictions. A clear objective and sufficient labeled data are essential for effective supervised training.

In contrast, unsupervised learning utilizes unlabeled data to identify patterns and structures within the dataset. This approach is particularly useful for tasks such as clustering, dimensionality reduction, and anomaly detection. Without explicit labels, the neural network discovers inherent groupings and relationships, making it adaptable to various applications where labeled data might be scarce.

Choosing between supervised and unsupervised learning depends on the nature of your data and the problem at hand. If you have a well-defined goal and ample labeled data, supervised learning is appropriate. Conversely, if you aim to explore unknown data structures or uncover hidden patterns, unsupervised learning offers the necessary flexibility. Both methods are vital tools in neural networks, each serving unique purposes in data analysis and pattern recognition.
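The contrast can be made concrete with a small sketch. It uses a nearest-centroid classifier for the supervised case and two-means clustering for the unsupervised case. Both are simple stand-ins chosen for illustration, not methods the perceptron itself uses, and the data, labels, and function names are hypothetical.

```python
from statistics import mean
from typing import List, Sequence, Tuple

# Hypothetical 1-D measurements: small values belong to class 0, large values to class 1.
values: List[float] = [1.0, 1.2, 0.8, 5.0, 5.3, 4.7]
labels: List[int] = [0, 0, 0, 1, 1, 1]


def fit_supervised(xs: Sequence[float], ys: Sequence[int]) -> Tuple[float, float]:
    """Supervised: use the labels to compute one centroid per class."""
    c0 = mean(x for x, y in zip(xs, ys) if y == 0)
    c1 = mean(x for x, y in zip(xs, ys) if y == 1)
    return c0, c1


def fit_unsupervised(xs: Sequence[float], iterations: int = 10) -> Tuple[float, float]:
    """Unsupervised: two-means clustering finds two centroids without any labels."""
    c0, c1 = min(xs), max(xs)  # simple deterministic starting guess
    for _ in range(iterations):
        group0 = [x for x in xs if abs(x - c0) <= abs(x - c1)]
        group1 = [x for x in xs if abs(x - c0) > abs(x - c1)]
        c0, c1 = mean(group0), mean(group1)
    return c0, c1


def assign(x: float, centroids: Tuple[float, float]) -> int:
    """Assign a value to the group whose centroid is closest."""
    c0, c1 = centroids
    return 0 if abs(x - c0) <= abs(x - c1) else 1


supervised = fit_supervised(values, labels)
unsupervised = fit_unsupervised(values)
print("supervised centroids:  ", supervised)
print("unsupervised centroids:", unsupervised)
print("new value 4.9 ->", assign(4.9, supervised), assign(4.9, unsupervised))
```

On this well-separated data both approaches recover roughly the same two groups. The difference is that the supervised version knows which group is "class 0" from the labels, whereas the cluster numbers in the unsupervised version are arbitrary and only line up here because of the starting guess.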

Early Applications

In the 1960s, perceptrons began their evolution by addressing pattern recognition and noise elimination tasks, setting the stage for future advancements in neural networks. These early applications were pivotal in demonstrating the potential of machines to mimic certain aspects of human cognition.

Perceptrons were applied to:

- Image recognition: Early experiments focused on distinguishing basic shapes and patterns in images, laying the groundwork for more complex visual systems.

- Speech recognition: Initial attempts aimed at recognizing spoken words, marking pioneering steps towards understanding and processing human language.

- Classification tasks: Perceptrons were used to categorize data into different classes, a fundamental aspect of machine learning.

- Madaline system: The related Madaline (Multiple ADALINE) system, which combined several adaptive linear units, represented a significant leap, handling more complex pattern recognition tasks than single-layer perceptrons.

These applications were essential in proving the practical viability of neural networks. The lessons learned and technologies developed during this period paved the way for the sophisticated advancements in neural networks we see today.

Limitations Discovered

Researchers in the 1960s quickly realized that single-layer perceptrons, which can only draw linear decision boundaries, could not solve problems whose classes are not linearly separable. The standard example is the XOR problem, in which the output is 1 when exactly one of two binary inputs is 1. No single straight line separates the inputs that map to 1 from those that map to 0, so a perceptron cannot represent XOR no matter how its weights are set.
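A short derivation shows why XOR is out of reach. Assume a single threshold unit with weights \(w_1\), \(w_2\) and bias \(b\) (symbols introduced here for illustration) that outputs 1 exactly when \(w_1 x_1 + w_2 x_2 + b \ge 0\). Matching the four XOR cases would require:

\[
\begin{aligned}
(0,0) \mapsto 0 &: \quad b < 0 \\
(1,0) \mapsto 1 &: \quad w_1 + b \ge 0 \\
(0,1) \mapsto 1 &: \quad w_2 + b \ge 0 \\
(1,1) \mapsto 0 &: \quad w_1 + w_2 + b < 0
\end{aligned}
\]

Adding the second and third conditions gives \(w_1 + w_2 + 2b \ge 0\), so \(w_1 + w_2 + b \ge -b > 0\) by the first condition. That contradicts the fourth condition, so no choice of weights and bias satisfies all four.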

Marvin Minsky and Seymour Papert's influential 1969 book, 'Perceptrons,' brought these limitations to light. They meticulously analyzed the shortcomings of single-layer perceptrons, emphasizing that such networks couldn't solve problems requiring non-linear separability. Their critique underscored the urgent need for more complex neural network architectures capable of tackling real-world challenges.

The exposure of these limitations had significant ramifications. Interest in and funding for neural network research fell sharply, contributing to a period later known as the 'AI winter.' During this time, progress stagnated due to skepticism and reduced investment. The limitations of perceptrons highlighted the need for innovation in neural network design, paving the way for future developments in multi-layered networks and deep learning.
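To illustrate why multi-layered networks were the natural next step, the sketch below wires three threshold units into two layers that together compute XOR. The weights are hand-picked for illustration rather than learned, and the function names are assumptions. How to train such hidden layers automatically was the open problem at the time.

```python
from typing import Sequence


def step(z: float) -> int:
    """Threshold activation: output 1 when the weighted sum reaches zero."""
    return 1 if z >= 0 else 0


def unit(inputs: Sequence[float], weights: Sequence[float], bias: float) -> int:
    """A single perceptron-style threshold unit."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def xor_network(x1: int, x2: int) -> int:
    """Two-layer network with hand-picked (not learned) weights that computes XOR."""
    # Hidden layer: each unit draws one linear boundary.
    h_or = unit((x1, x2), (1.0, 1.0), -0.5)     # fires if at least one input is 1
    h_nand = unit((x1, x2), (-1.0, -1.0), 1.5)  # fires unless both inputs are 1
    # Output layer: fires only when both hidden units fire (logical AND).
    return unit((h_or, h_nand), (1.0, 1.0), -1.5)


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print((a, b), "->", xor_network(a, b))
```

Each hidden unit is still a linear classifier, but combining two of them lets the output unit carve out a region that no single line can describe.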

Impact on AI Research

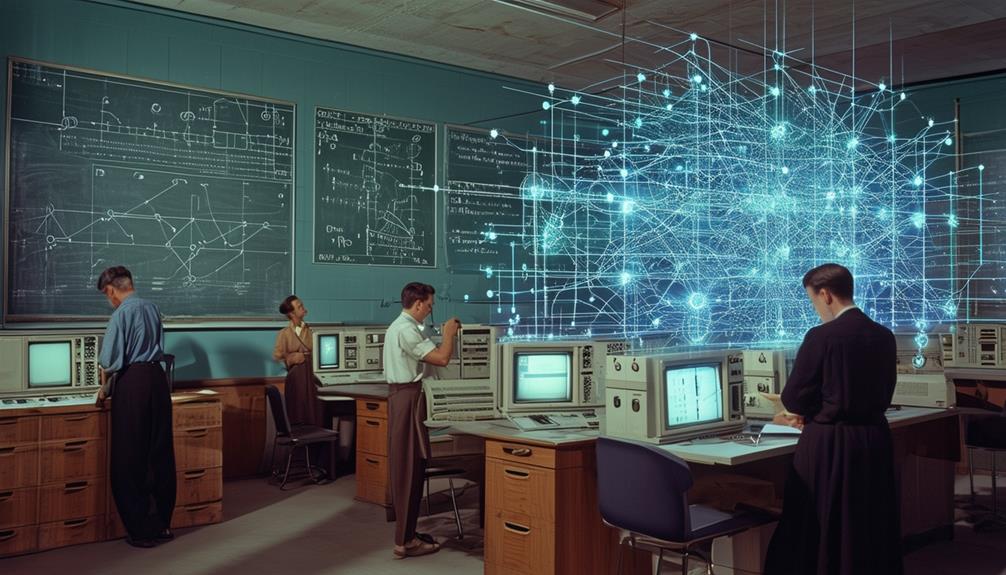

Rosenblatt's pioneering work with perceptrons revolutionized AI research, laying the foundation for future neural network advancements. By introducing the perceptron algorithm, Frank Rosenblatt showcased the potential for training artificial neural networks, challenging traditional computing methods and inspiring a new era in neural network development.

Rosenblatt's perceptron algorithm demonstrated how machines could learn from data, marking a significant milestone in AI history. This breakthrough had several profound impacts on AI research, including:

- Ignition of Debates: The introduction of perceptrons sparked intense discussions among researchers about the capabilities and limitations of artificial neural networks.

- New Research Directions: Rosenblatt's work opened up avenues for exploring multi-layer networks and deep learning, shaping the future of AI research.

- Training Techniques: The perceptron algorithm provided a foundational method for training neural networks, influencing subsequent methodologies and practices.

- Milestone Achievements: The emergence of perceptrons marked a pivotal moment in AI, leading to continuous innovations and advancements in neural network development.

Through these contributions, Rosenblatt's perceptrons didn't just change the landscape of AI research; they laid the groundwork for the sophisticated neural networks and deep learning systems we see today.

Decline in Interest

During the late 1960s, enthusiasm for neural networks waned due to the perceptron's limitations and mounting criticisms. Marvin Minsky and Seymour Papert's 1969 book 'Perceptrons' played a significant role in this decline. They meticulously outlined the limitations of single-layer perceptrons, particularly their inability to classify data that is not linearly separable, which was a major stumbling block.

As researchers began to understand these limitations, optimism surrounding neural networks diminished. Minsky and Papert's critiques highlighted that perceptrons couldn't solve complex problems, which led many in the field to question the viability of neural networks as a whole. Consequently, funding for neural network research started to dry up. Investors and academic institutions alike were hesitant to support a technology that seemed to have hit a significant roadblock.

This period of skepticism and reduced investment contributed to what is now known as the AI winter, a time of decreased interest and funding in AI research. The perceived shortcomings of perceptrons, exacerbated by Minsky and Papert's criticisms, were pivotal in triggering this downturn.

Legacy and Future Influence

The groundwork laid by perceptrons continues to shape the trajectory of modern neural networks and deep learning technologies. Frank Rosenblatt's perceptron algorithm is foundational to many advancements in AI, sparking interest in neural networks and leading to today's sophisticated models.

Modern neural networks, such as deep feedforward neural networks, owe much to the legacy of the perceptron. These models have benefited from the concepts of neural plasticity and credit assignment, both central to the perceptron algorithm. The influence of perceptrons stretches beyond their original scope, impacting diverse aspects of AI research and development.

- Neural Plasticity: Inspired by how biological neurons adapt, this concept aids in designing flexible and efficient neural networks.

- Credit Assignment: The problem of deciding which weights are responsible for an error. The perceptron's update rule is a simple answer for a single layer, and solving the problem in general is crucial for model training.

- Deep Feedforward Neural Networks: These advanced networks are direct descendants of the perceptron, showcasing its enduring legacy.

- AI Technologies: From voice recognition to autonomous driving, perceptron principles underpin many modern applications.

The perceptron's legacy and future influence continue to drive innovations in artificial intelligence, ensuring Rosenblatt's work remains relevant.

Conclusion

Perceptrons revolutionized the field of artificial intelligence in the 1960s despite their early limitations. Frank Rosenblatt's pioneering work laid the groundwork for today's advanced neural networks and deep learning systems. Although interest in perceptrons waned temporarily, their influence persists, driving ongoing research and innovation. Ultimately, perceptrons ignited a spark that continues to propel AI forward, shaping the transformative technologies we see today.