The Rise of Expert Systems in AI During the 1970s

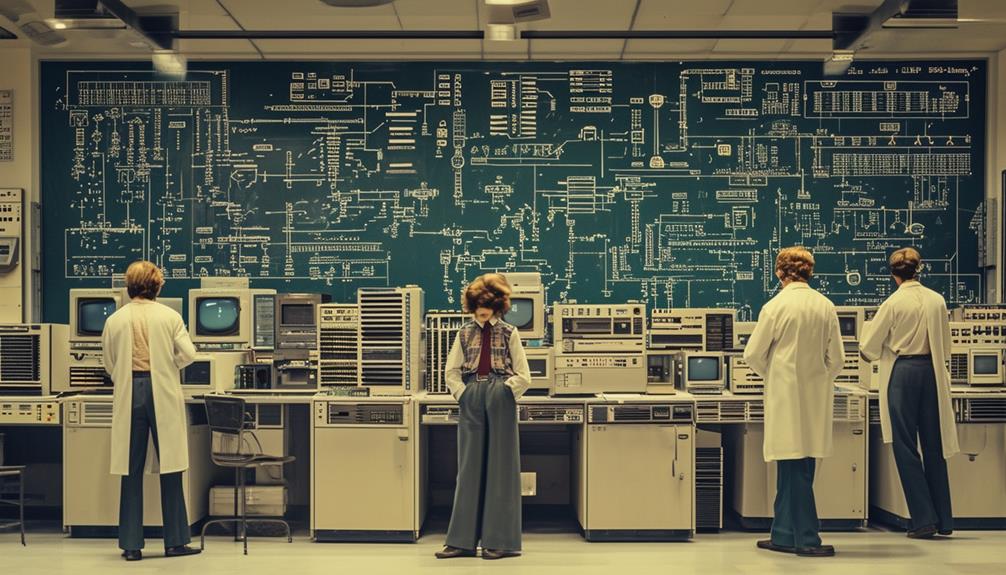

Imagine you're in the 1970s, a time when the idea of computers making expert decisions seemed almost like science fiction. This period saw the emergence of expert systems in AI, thanks to visionaries like Edward Feigenbaum and Joshua Lederberg. These systems, built on rule-based logic, aimed to replicate human expertise in various fields. Projects like DENDRAL and MYCIN demonstrated how machines could analyze data and provide solutions in specialized domains. But what exactly made these systems function, and how did they overcome the technological limitations of their time?

Early Beginnings

In the 1970s, pioneers like Edward Feigenbaum and Joshua Lederberg, whose DENDRAL project had begun at Stanford in 1965, drove the development of expert systems aimed at replicating human decision-making within specific domains. This era marked a significant evolution in artificial intelligence, as researchers shifted from general-purpose problem solving to specialized applications that addressed complex problems in a single field. This line of work produced groundbreaking systems like DENDRAL and MYCIN, which showcased AI's potential in domains requiring extensive human expertise.

Expert systems of this time relied heavily on rule-based logic, using 'if-then-else' constructs to emulate human reasoning. These constructs formed the core of the system's decision-making process, enabling it to tackle intricate scenarios with accuracy. A pivotal component was the inference engine, which facilitated the system's ability to derive insights from the established rules. By mirroring the decision-making processes of human experts, these systems offered valuable perspectives and solutions.

Edward Feigenbaum's contributions were particularly noteworthy; he was a strong advocate for the use of specialized AI applications. His work, along with Lederberg's, laid the foundational framework for what would become transformative technology in various domains. The 1970s thus emerged as a crucial decade for expert systems, setting the stage for future advancements in artificial intelligence.

Key Components

In examining the key components of expert systems from the 1970s, three fundamental elements stand out: the knowledge base, the inference engine, and the user interface. The knowledge base contained structured information, while the inference engine utilized logical rules to derive conclusions. The user interface enabled users to interact with the system, input queries, and receive expert-level advice.

Knowledge Base Structure

A well-structured knowledge base forms the backbone of expert systems, organizing information through classes, subclasses, and instances. Classes group related information, while subclasses refine these classifications to capture more specific details. For example, in a medical diagnosis system, you might have a class for diseases, subclasses for respiratory and cardiovascular diseases, and instances for specific conditions like asthma or hypertension.
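The class, subclass, and instance layout described above can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the `PARENT` table and the helper names `ancestors` and `is_a` are invented here, and a real knowledge base would carry far more structure.

```python
# Hypothetical taxonomy: each entry maps a name to its parent class.
# "disease" is the root class; asthma and hypertension are instances.
PARENT = {
    "respiratory disease": "disease",
    "cardiovascular disease": "disease",
    "asthma": "respiratory disease",
    "hypertension": "cardiovascular disease",
}


def ancestors(name: str) -> list[str]:
    """Return the chain of classes above `name`, nearest first."""
    chain = []
    while name in PARENT:
        name = PARENT[name]
        chain.append(name)
    return chain


def is_a(name: str, cls: str) -> bool:
    """True if `name` is `cls` itself or falls anywhere under it."""
    return name == cls or cls in ancestors(name)


print(ancestors("asthma"))  # ['respiratory disease', 'disease']
print(is_a("hypertension", "respiratory disease"))  # False
```

Storing only the parent link keeps the table small, and anything said about a class can then be applied to every instance beneath it.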

Knowledge bases don't just categorize data; they also store essential rules and facts necessary for the system's functioning. These rules define how different pieces of information are connected and how the inference engine should process them to make decisions. For instance, a rule might specify that if a patient has a persistent cough and shortness of breath, the system should consider asthma as a possible diagnosis.
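One way to picture such a rule is as plain data that the inference engine can inspect. The sketch below encodes the cough-and-breathlessness example; the `Rule` class and the `applies` helper are hypothetical names chosen for this illustration, and the rule is not clinical guidance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """An if-then rule: if every condition is an observed fact, suggest the conclusion."""
    conditions: frozenset
    conclusion: str


# Illustrative rule taken from the example in the text; not clinical guidance.
ASTHMA_RULE = Rule(
    conditions=frozenset({"persistent cough", "shortness of breath"}),
    conclusion="consider asthma",
)


def applies(rule: Rule, facts: set) -> bool:
    """A rule applies when all of its conditions appear among the facts."""
    return rule.conditions <= facts


patient = {"persistent cough", "shortness of breath", "fatigue"}
if applies(ASTHMA_RULE, patient):
    print(ASTHMA_RULE.conclusion)  # consider asthma
```

Keeping rules as data rather than hard-coded branches is what lets domain experts add or revise them without touching the engine.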

Expert systems rely heavily on domain experts to curate and update the knowledge base, ensuring it remains accurate and relevant. This continuous updating process is critical for the system's performance and reliability. By effectively organizing and managing the knowledge base, you can ensure that your expert system delivers precise and trustworthy advice or solutions.

Inference Engine Functionality

The inference engine is the core component of expert systems, responsible for evaluating and applying rules from the knowledge base to make informed decisions. It ensures accurate outcomes through logical reasoning and systematic rule processing. The inference engine determines the sequence of rules necessary to solve problems or answer queries, making it essential for the expert system's efficiency and effectiveness.

Key functionalities of the inference engine include:

- Rule Evaluation: It examines each rule in the knowledge base to determine its applicability to the current situation.

- Logical Reasoning: The engine employs logical reasoning to connect various pieces of information, ensuring coherent and rational decisions.

- Sequence Determination: It identifies the optimal sequence for applying rules, which is crucial for achieving the correct outcome.

- Techniques: Common techniques such as forward chaining and backward chaining enhance the engine's ability to process information efficiently.

In forward chaining, the inference engine starts with available data and applies rules to derive new data until a goal is achieved. In backward chaining, it begins with a goal and works backward to identify the rules and data that support that goal. The performance of your expert system relies heavily on the efficiency and accuracy of its inference engine, making it a pivotal component.
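The two strategies can be compared side by side in a minimal Python sketch. The rule contents, the `RULES` list, and the function names are assumptions made for illustration; production engines of the period were considerably more elaborate.

```python
# Each rule is (set of premises, conclusion). The rule content is invented
# for illustration only.
RULES = [
    ({"persistent cough", "shortness of breath"}, "airway problem"),
    ({"airway problem", "wheezing"}, "suspect asthma"),
]


def forward_chain(facts: set, rules=RULES) -> set:
    """Data-driven: keep firing rules until no new fact can be added."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


def backward_chain(goal: str, facts: set, rules=RULES, seen=frozenset()) -> bool:
    """Goal-driven: a goal holds if it is a known fact, or if some rule
    concludes it and all of that rule's premises can be proven in turn."""
    if goal in facts:
        return True
    if goal in seen:  # guard against circular rule chains
        return False
    return any(
        conclusion == goal
        and all(backward_chain(p, facts, rules, seen | {goal}) for p in premises)
        for premises, conclusion in rules
    )


observed = {"persistent cough", "shortness of breath", "wheezing"}
print("suspect asthma" in forward_chain(observed))  # True
print(backward_chain("suspect asthma", observed))   # True
```

Forward chaining derives everything the data supports, which suits open-ended monitoring; backward chaining examines only the rules relevant to one hypothesis, which suits a diagnostic question.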

User Interface Design

Designing an effective user interface for expert systems is crucial for ensuring that these complex tools are both accessible and functional. An intuitive and user-friendly interface enables users to navigate through the system's functionalities effortlessly, facilitating data input, result retrieval, and decision-making processes.

A clear and logical layout is essential for easy navigation. In the 1970s this usually meant a text-based question-and-answer dialogue, as in MYCIN's consultations; later systems added visual representations such as charts and graphs to simplify data comprehension. In either form, clear prompts and immediate feedback make the system easier to use.

An effective user interface goes beyond aesthetics; it significantly boosts user engagement and efficiency. Well-designed, intuitive controls prevent users from feeling overwhelmed, leading to better decision-making and a more satisfying user experience.
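As a rough sketch of the input-and-advice loop described in this section, the Python below assumes a text-based yes/no dialogue. The questions, the `consult` function, and the advice strings are all hypothetical; the `ask` parameter defaults to the built-in `input` but can be swapped for scripted answers.

```python
from typing import Callable

# Hypothetical questions; each maps a yes/no prompt to the fact it establishes.
QUESTIONS = {
    "Does the patient have a persistent cough?": "persistent cough",
    "Is the patient short of breath?": "shortness of breath",
}


def consult(ask: Callable[[str], str] = input) -> str:
    """Ask each question, collect the confirmed facts, and report advice."""
    facts = set()
    for prompt, fact in QUESTIONS.items():
        if ask(prompt + " (y/n) ").strip().lower().startswith("y"):
            facts.add(fact)
    if {"persistent cough", "shortness of breath"} <= facts:
        return "Advice: consider asthma."
    return "Advice: no rule matched; refer to a specialist."


# Scripted answers stand in for a user at the keyboard.
answers = iter(["y", "yes"])
print(consult(lambda prompt: next(answers)))  # Advice: consider asthma.
```

Separating the dialogue from the rules means the same knowledge base could sit behind a different front end.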

Symbolic Reasoning

When exploring symbolic reasoning in expert systems, you will encounter knowledge representation methods that use symbols to encode information. Rule-based systems, with their 'if-then' constructs, form the core of these methods, enabling the system to simulate human decision-making. This approach allows expert systems to effectively tackle complex problems and provide clear, understandable explanations for their decisions.

Knowledge Representation Methods

In the 1970s, expert systems in AI primarily utilized symbolic reasoning to encode and manipulate domain-specific knowledge through logic-based representations. These methods were crucial for structuring information so that machines could process and comprehend it. Symbolic reasoning enabled the modeling of complex relationships and rules, thereby facilitating decision-making processes within expert systems.

Here's a breakdown of the approach:

- Encoding Knowledge: Domain-specific knowledge was represented as rules, facts, and relationships, allowing the system to grasp the intricacies of a particular field.

- Logic-Based Representations: Logic served as the foundation, ensuring that the encoded knowledge could be systematically manipulated to derive conclusions and make decisions.

- Reasoning and Inference: With symbolic logic, expert systems could perform reasoning tasks, inferring new information from existing data.

- Domain-Specific Efficiency: These methods were optimized for specific domains, making expert systems highly specialized and efficient in addressing particular problems.

Through these techniques, symbolic reasoning in expert systems significantly advanced the automation of complex decision-making processes across various fields. The focus on logic-based representations ensured that knowledge was not only stored but also applied consistently to reach conclusions.
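To make the idea of encoding knowledge as symbols more concrete, here is a small Python sketch that stores facts as triples, queries them with variables, and infers new facts. The facts, the `?name` variable convention, and the functions `match` and `infer_transitive` are assumptions for illustration rather than a reconstruction of any 1970s system.

```python
# Facts are (subject, relation, object) triples of plain symbols.
# The facts themselves are made up for illustration.
FACTS = {
    ("asthma", "is_a", "respiratory disease"),
    ("respiratory disease", "is_a", "disease"),
    ("asthma", "has_symptom", "wheezing"),
}


def match(pattern: tuple, facts=FACTS) -> list[dict]:
    """Return one binding dict per fact that fits the pattern.
    Terms starting with '?' are variables; everything else must match exactly."""
    results = []
    for fact in facts:
        bindings = {}
        for term, value in zip(pattern, fact):
            if term.startswith("?"):
                # A repeated variable must bind to the same value each time.
                if bindings.setdefault(term, value) != value:
                    break
            elif term != value:
                break
        else:
            results.append(bindings)
    return results


def infer_transitive(relation: str, facts=FACTS) -> set:
    """Derive new triples: if (a rel b) and (b rel c), then (a rel c)."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for a, r1, b in list(derived):
            for b2, r2, c in list(derived):
                if r1 == r2 == relation and b == b2 and (a, relation, c) not in derived:
                    derived.add((a, relation, c))
                    changed = True
    return derived


print(match(("?x", "has_symptom", "wheezing")))  # [{'?x': 'asthma'}]
print(("asthma", "is_a", "disease") in infer_transitive("is_a"))  # True
```

The second call shows inference in the sense used above: the fact that asthma is a disease is never stored, yet it follows from the two `is_a` triples.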

Rule-Based Systems

Building on the foundation of symbolic reasoning, rule-based systems in the 1970s replicated human decision-making through if-then-else constructs and inference engines. These systems were crucial in early AI applications, enabling computers to tackle complex problem-solving tasks. By utilizing symbolic reasoning, rule-based systems could manipulate symbols and logic to derive conclusions, much like a human expert.

The essence of these systems was their use of if-then-else constructs, which allowed them to apply specific rules to given scenarios. For example, an expert system might diagnose medical conditions by following a set of predefined rules: if the patient has a fever and a cough, then consider flu; otherwise, consider alternative conditions. This explicit rule-based reasoning facilitated clear and understandable decision-making processes.

Inference engines were vital in processing these rules and drawing logical conclusions. They supported knowledge representation by efficiently organizing and applying the rules. This approach not only made the decision process transparent but also allowed for easy updates and modifications to the rule set. Rule-based systems illustrated how symbolic reasoning could be leveraged to emulate expert-level decision-making in the early stages of AI development.
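The fever-and-cough rule above, together with the transparency it affords, can be sketched as follows. The `diagnose` function and its explanation strings are hypothetical, and a single rule is used purely for illustration.

```python
def diagnose(symptoms: set) -> tuple[str, str]:
    """Apply the single if-then-else rule from the text and explain the outcome.
    Illustrative only; a real system would hold many rules."""
    required = {"fever", "cough"}
    if required <= symptoms:
        return "consider flu", "Rule fired: fever AND cough are both present."
    missing = sorted(required - symptoms)
    return (
        "consider alternative conditions",
        "Rule did not fire: missing " + ", ".join(missing) + ".",
    )


conclusion, why = diagnose({"fever", "headache"})
print(conclusion)  # consider alternative conditions
print(why)         # Rule did not fire: missing cough.
```

Returning the reason alongside the conclusion is the simplest form of the explanation capability that made rule-based decisions easy to audit.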

Rule-Based Systems Revolution

How did rule-based systems revolutionize expert systems in the 1970s? They fundamentally transformed the landscape of AI by introducing a structured way to emulate human expertise. Rule-based systems utilized 'if-then-else' constructs to make decisions based on predefined rules, simulating the decision-making process of domain experts. These rules were meticulously crafted and maintained by domain experts to ensure accuracy and relevance.

The innovation lay in the use of inference engines, which applied these predefined rules to the given data, deducing outcomes or recommendations. This method provided a reliable way to capture and automate the expertise of professionals across various fields. Here's how:

- Structured Approach: Rule-based systems provided a clear, organized method for problem-solving, making them easier to understand and manage.

- Reliability: By adhering to predefined rules, these systems ensured consistent and dependable outputs.

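- Extensibility: Because each rule was a self-contained unit, new rules could be added without rewriting the program, which facilitated the development of more complex and specialized expert systems in diverse fields.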

- Efficiency: Inference engines enabled quick and efficient data processing, leading to faster decisions and recommendations.

Notable Examples

Let's delve into some notable examples that illustrate how rule-based systems revolutionized expert systems in the 1970s. First, there's DENDRAL, an early expert system whose development began in the mid-1960s. It was groundbreaking in assisting organic chemists by interpreting mass spectrometry data to hypothesize molecular structures. This system highlighted the practical applications of AI in specialized domains like chemistry.

Then came MYCIN in the early 1970s, designed to diagnose bacterial infections. MYCIN used patient history, symptoms, and bacterial resistance patterns to suggest treatments. Its ability to make accurate medical diagnoses underscored the power of expert systems in the healthcare domain.

Another significant example is XCON, also known as R1. Developed in the late 1970s at Carnegie Mellon University for Digital Equipment Corporation, XCON configured computer systems, using expert knowledge to turn a customer order into a complete and consistent set of components. This system was particularly influential in demonstrating how AI could be applied in the computing industry, a specialized domain with complex requirements.

These expert systems—DENDRAL, MYCIN, and XCON—showcased the transformative potential of AI. Their success not only provided practical applications in their respective fields but also spurred considerable investment and further research in AI, cementing the role of expert systems in different specialized domains.

Technological Advances

Technological advances in the 1970s, particularly more capable computers and the maturing of symbolic programming languages such as LISP (created in the late 1950s) and Prolog (introduced in 1972), were instrumental in the evolution of expert systems. These technologies enabled the creation of complex rule-based logic and inference engines, essential for replicating human decision-making processes. Expert systems drew their intelligence from domain-specific knowledge representation, relying on detailed, specialized information.

Here's how these advances contributed to the development of expert systems:

- Powerful Computers: Enhanced computing capabilities made it feasible to process intricate rule-based logic and manage extensive data sets.

- Programming Languages: Languages such as LISP and Prolog provided robust tools for building inference engines and handling symbolic reasoning.

- Knowledge-Based Approaches: Researchers embedded human expertise into systems, allowing them to perform specialized tasks with proficiency.

- Domain-Specific Knowledge Representation: Expert systems excelled in specialized fields due to precise and detailed knowledge inputs.

These technological strides were crucial. Without the advancements in computing power, programming languages, and knowledge-based approaches, the emergence of expert systems would not have been possible.

Challenges Faced

Developing expert systems in the 1970s presented several significant challenges that impacted their growth and effectiveness. One major issue was the knowledge acquisition bottleneck. Extracting and encoding domain expertise was a time-consuming process, limiting the scalability of expert systems. Capturing the nuanced knowledge of experts was not straightforward and demanded extensive effort.

Another critical challenge was the limited learning capability of these systems. Expert systems couldn't easily adapt to new or evolving scenarios, constraining their effectiveness in dynamic environments. This lack of adaptability also meant they struggled with handling complex situations that weren't initially programmed into them.

Maintenance complexity was another significant hurdle. Updating and refining the knowledge base required significant effort, often necessitating the involvement of skilled professionals. This made the regular upkeep of expert systems both time-consuming and costly.

Interpretability issues further complicated matters. Although individual rules were readable, the interaction of many rules could make a system's overall reasoning hard to follow, making it difficult for users to trust and accept its recommendations. Long-term usability suffered as well: as the environment or domain changed, these systems often couldn't keep pace, reducing their effectiveness over time.

Legacy and Influence

Expert systems from the 1970s, such as DENDRAL and MYCIN, revolutionized decision-making by simulating human expertise in specialized fields. These systems marked a pivotal moment in AI history, demonstrating how computers could replicate human reasoning and problem-solving capabilities. By leveraging rule-based logic and extensive knowledge bases, these early expert systems paved the way for modern AI applications.

The legacy of these pioneering systems is profound:

- Foundation for Rule-Based Logic: They established the importance of rule-based logic in AI, which remains a cornerstone in developing intelligent systems.

- Enhanced Decision-Making Processes: Their ability to simulate human expertise in decision-making processes has had a lasting influence on industries such as medicine and chemistry.

- Knowledge Base Development: They demonstrated the value of creating vast knowledge bases to facilitate accurate and reliable AI-driven solutions.

- Catalyzed AI Investments: The success of expert systems led to increased investments in AI research and practical applications across diverse sectors.

The influence of these systems is evident in modern AI technologies that rely on structured knowledge and logical frameworks. Expert systems showed the world that machines could process data and make informed decisions, pushing the boundaries of AI's capabilities. This legacy continues to inspire advancements in AI, shaping the technologies we rely on today.

Future Prospects

As we look to the future, integrating expert systems with modern AI technologies promises to bring forth new levels of performance and capability. The foundational work by AI pioneers like Alan Turing laid the groundwork, but today's landscape offers unprecedented opportunities. By combining the rule-based logic of expert systems with the adaptive learning capabilities of machine learning, powerful, integrated solutions can be created that surpass the limitations of each approach individually.

Ongoing innovation is vital to navigating the evolving AI landscape. The development of hybrid systems that utilize both machine learning and expert systems can address complex challenges more effectively. Imagine systems that not only draw from vast data sets but also apply expert knowledge to make informed decisions in real-time. This blend could revolutionize industries ranging from healthcare to finance.
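A hybrid of this kind might, in its simplest form, let expert rules take precedence and fall back on a learned score otherwise. The sketch below is speculative: `learned_risk_score` is a stand-in with invented weights rather than a trained model, and the feature names and thresholds are hypothetical.

```python
def learned_risk_score(features: dict) -> float:
    """Stand-in for a trained model; a real system would call one here.
    The weights are invented for illustration."""
    score = (
        0.1
        + 0.4 * features.get("abnormal_reading", 0)
        + 0.3 * features.get("prior_incident", 0)
    )
    return min(score, 1.0)


def hybrid_decision(features: dict, threshold: float = 0.5) -> str:
    """Expert rules take precedence; the learned score decides the rest."""
    # Hard rule supplied by a domain expert (hypothetical).
    if features.get("critical_alarm"):
        return "escalate (expert rule)"
    if learned_risk_score(features) >= threshold:
        return "review (model score)"
    return "no action"


print(hybrid_decision({"critical_alarm": True}))
print(hybrid_decision({"abnormal_reading": 1, "prior_incident": 1}))
print(hybrid_decision({}))
```

Putting the rule check first keeps the expert knowledge auditable, while the scoring function can be retrained as new data arrives.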

Exploring new applications where expert systems can provide valuable decision support is crucial. Continuous research is already underway to improve the efficiency and capabilities of these systems, ensuring they remain relevant and robust. As artificial intelligence advances, the integration of these technologies will be key to realizing their full potential, driving growth, and delivering smarter, more efficient solutions.

Conclusion

The 1970s laid the groundwork for applied AI with the development of expert systems. These systems, driven by rule-based logic and symbolic reasoning, transformed decision-making in specialized fields such as medicine, chemistry, and computer configuration. While challenges existed, the technological advancements of this era have left a lasting impact, shaping modern AI innovations. Today's AI advancements are built upon the pioneering efforts of early expert systems, indicating that the evolution of AI is far from complete.