The Evolution of Neural Networks and Backpropagation During the 1980s

Imagine you're in the 1980s, witnessing breakthroughs in neural networks that would redefine artificial intelligence. In 1982, John Hopfield introduced networks with bidirectional (symmetric) connections, offering new solutions for associative memory tasks. Around the same time, Reilly and Cooper experimented with hybrid networks that combined different problem-solving strategies across multiple layers. However, it was the 1986 work by Rumelhart, Hinton, and Williams popularizing the backpropagation algorithm that truly transformed the field, solving the longstanding problem of how to update weights in multi-layer networks. Curious about how these developments reshaped the AI landscape? Let's look at the details and their lasting impact.

Early Concepts

In 1982, John Hopfield introduced bidirectional connections, changing how researchers thought about neural networks. Earlier connections between neurons ran only one way; in Hopfield's design, information could travel in both directions between nodes, letting the network evolve over time and settle into stable states. Until then, most trainable networks, such as Rosenblatt's perceptron, had a single layer of adjustable weights, and there was no general method for training networks with more layers. Researchers such as Reilly and Cooper pushed toward multi-layer designs, using hybrid networks in which different layers applied different problem-solving strategies.

Bidirectional connections were more than a technical novelty: Hopfield's work helped revive interest in neural networks after a long lull, and that renewed attention set the stage for the backpropagation algorithm. Backpropagation became essential for training multi-layer networks by minimizing the error across all of their layers, allowing networks to be tuned for much greater accuracy and marking a significant advance in the field.

The early 1980s were transformative for neural networks, thanks to these foundational concepts. They laid the groundwork for the rapid advancements that followed, making neural networks integral to modern technology. Understanding these early concepts is crucial for appreciating the progress made in the field.

Key Contributors

The 1980s were marked by groundbreaking contributions from pioneering researchers like John Hopfield, David Rumelhart, and Geoffrey Hinton. Their landmark publications and influential algorithms, such as the backpropagation algorithm, set the stage for modern neural networks. By exploring their work, you'll understand the foundational advancements that propelled neural network research forward.

Pioneering Researchers

The 1980s marked a significant era in the evolution of neural networks, driven by the pioneering efforts of John Hopfield, Reilly and Cooper, and David Rumelhart.

In 1982, John Hopfield introduced the recurrent network that now bears his name: an associative memory with symmetric, bidirectional connections. It was pivotal in demonstrating how neural networks could store and retrieve information, and it laid crucial groundwork for later advances in the field.

Reilly and Cooper experimented with hybrid neural networks that had multiple layers, each layer applying a different problem-solving strategy. Their early 1980s work expanded what researchers believed multi-layer systems could achieve.

In 1986, David Rumelhart, together with Geoffrey Hinton and Ronald Williams, published the work that popularized the backpropagation algorithm. Backpropagation distributes the error in pattern recognition tasks back through every layer of the network, enabling multi-layer networks to learn complex internal representations. Although networks trained this way learned slowly, often needing thousands of iterations, backpropagation quickly became a focal point of research.

| Pioneer | Contribution |
|---|---|
| John Hopfield | Hopfield network: recurrent associative memory with symmetric, bidirectional connections (1982) |
| Reilly and Cooper | Hybrid neural networks with multiple layers (early 1980s) |
| David Rumelhart (with Hinton and Williams) | Backpropagation algorithm (1986) |

The contributions of these researchers were vital to the rapid evolution of neural networks during the 1980s.

Landmark Publications

David Rumelhart's 1986 publication with Hinton and Williams on backpropagation marks a pivotal moment in neural network research. It changed how multi-layer networks were trained and drove significant advances in pattern recognition. Before this breakthrough, there was no efficient way to assign responsibility for an output error to the weights of the hidden layers. Backpropagation solved this by computing how much each weight contributed to the error and updating all the weights accordingly, so that networks could learn over many iterations.

Thanks to backpropagation, neural networks were no longer limited to a single layer of trainable weights. Networks with hidden layers, capable of recognizing complex patterns and learning internal representations, became practical to train. This advance was crucial for later applications in fields like computer vision and natural language processing, and the ability to train multi-layer networks paved the way for deep learning and many modern AI achievements.

Influential Algorithms

Alongside Rumelhart's work, the 1980s saw several influential algorithms emerge. John Hopfield's 1982 network, a recurrent neural network used as an associative memory, changed how researchers thought about storing and recalling memories in neural networks. Its bidirectional connections offered a new way to handle the flow of data and had a significant impact on the field.

Another game-changer was the backpropagation algorithm, popularized by David Rumelhart, Geoffrey Hinton, and Ronald Williams in 1986. The Widrow-Hoff (delta) rule could train only a single layer of weights, because it needs an error signal at each unit and hidden units have no target values. Backpropagation generalizes that rule to multiple layers, which is why it is also called the generalized delta rule. It distributes pattern recognition errors across the network, allowing multi-layer networks to learn complex representations through numerous iterations.
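The single-layer Widrow-Hoff rule that backpropagation generalizes fits in a few lines. The sketch below is illustrative only: it assumes NumPy and a made-up, noiseless dataset generated from a known linear rule, and trains one linear unit with the delta rule. Because there is no hidden layer, the output error tells each weight directly how to change, which is exactly what stops working once hidden layers are added.

```python
import numpy as np

def train_lms(X, t, lr=0.1, epochs=500):
    """Widrow-Hoff (LMS / delta rule) for a single linear unit y = w.x + b.

    Each sample nudges the weights along (target - output) * input, which is
    stochastic gradient descent on the squared error 0.5 * (t - y)**2.
    """
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        for x_i, t_i in zip(X, t):
            y_i = w @ x_i + b      # linear output, no hidden layer
            err = t_i - y_i        # the "delta" in the delta rule
            w += lr * err * x_i
            b += lr * err
    return w, b

# Hypothetical toy data generated from a known linear rule: t = 2*x1 - 3*x2 + 1
X = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]])
t = 2 * X[:, 0] - 3 * X[:, 1] + 1
w, b = train_lms(X, t)
```

Because the toy data is exactly linear, the learned weights should approach 2 and -3 and the bias should approach 1.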

Before backpropagation, multi-layer designs such as hybrid networks existed, but there was no efficient, general way to train all of their layers. Backpropagation's generality and learning capability led to its dominance. The work of these key figures, together with international efforts such as the US-Japan conference on Cooperative/Competitive Neural Networks and Japan's Fifth Generation project, spurred renewed interest and significant advances in neural networks during the 1980s.

| Key Contributor | Major Contribution |
|---|---|
| John Hopfield | Hopfield network (recurrent associative memory) |
| David Rumelhart | Lead author of the 1986 backpropagation work |
| Geoffrey Hinton | Co-author of the 1986 backpropagation work |
| Ronald Williams | Co-author of the 1986 backpropagation work |
| Bernard Widrow and Marcian Hoff | Widrow-Hoff (delta) rule for single-layer networks, which backpropagation generalizes to multiple layers |

This period was marked by groundbreaking work that laid the foundation for modern neural network research.

Hopfield Networks

Hopfield Networks operate on the principle of energy minimization, guiding the system towards stable states. These networks are particularly effective in associative memory tasks, as they can retrieve stored patterns from partial or noisy inputs. Their stability and convergence properties provide crucial insights into neural systems' capabilities for reliable pattern recognition.

Energy Minimization Principle

Hopfield networks, introduced in 1982, use the energy minimization principle to perform associative memory tasks. Unlike feedforward networks trained with backpropagation, they are recurrent: each stored pattern is a stable state, a local minimum of an energy function. They use binary threshold units and symmetric connections. When units are updated one at a time, the network's global energy can never increase, so the network converges to one of these stable states.
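One standard way to write this down uses bipolar states s_i ∈ {-1, +1}, weights w_ij, and thresholds θ_i. This notation, and the bipolar variant of the binary units, are introduced here for illustration. The energy, the threshold update rule, and the energy change when unit k is updated are:

```latex
E(\mathbf{s}) = -\frac{1}{2} \sum_{i \neq j} w_{ij}\, s_i s_j + \sum_i \theta_i s_i,
\qquad w_{ij} = w_{ji}, \quad w_{ii} = 0

s_k \leftarrow \operatorname{sign}\Big(\sum_j w_{kj} s_j - \theta_k\Big)

\Delta E = -\big(s_k^{\text{new}} - s_k^{\text{old}}\big)\Big(\sum_j w_{kj} s_j - \theta_k\Big) \le 0
```

A unit only flips when its new sign agrees with its local field, so the product in the last line is never negative and ΔE is never positive. This is why one-at-a-time updates can only lower the energy or leave it unchanged.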

Key aspects of Hopfield networks include:

- Energy Minimization: The core mechanism involves minimizing an energy function, guiding the network to stable states.

- Associative Memory: They excel in retrieving stored patterns from partial or corrupted inputs.

- Binary Threshold Units: These units simplify the network's structure and computations.

- Symmetric Connections: Symmetric weights with no self-connections guarantee that the energy never increases under one-at-a-time updates, which is what makes the network stable.
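The energy-minimization behavior described above can be checked numerically. The following NumPy sketch stores a few random ±1 patterns with the Hebbian outer-product rule, keeps the weights symmetric with no self-connections, and records the energy after every single-unit update starting from an arbitrary state. The network size, pattern count, and random seed are arbitrary assumptions.

```python
import numpy as np

def hebbian_weights(patterns):
    """Store bipolar (+1/-1) patterns with the Hebbian outer-product rule."""
    p = np.asarray(patterns, dtype=float)
    W = p.T @ p / p.shape[1]
    np.fill_diagonal(W, 0.0)      # no self-connections
    return W                      # symmetric by construction

def energy(W, s, theta):
    return -0.5 * s @ W @ s + theta @ s

def async_sweep(W, s, theta, rng):
    """Update units one at a time in random order; record energy after each update."""
    s = s.copy()
    energies = [energy(W, s, theta)]
    for i in rng.permutation(len(s)):
        h = W[i] @ s - theta[i]   # local field minus threshold
        if h != 0:                # on a tie, keep the current state
            s[i] = 1.0 if h > 0 else -1.0
        energies.append(energy(W, s, theta))
    return s, energies

rng = np.random.default_rng(0)
patterns = rng.choice([-1.0, 1.0], size=(3, 64))
W = hebbian_weights(patterns)
theta = np.zeros(64)                        # zero thresholds for simplicity
s0 = rng.choice([-1.0, 1.0], size=64)       # arbitrary start state
s1, energies = async_sweep(W, s0, theta, rng)
```

Whatever the seed, the recorded energies form a non-increasing sequence, as the symmetric-weight argument predicts.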

Associative Memory Mechanism

Understanding the associative memory mechanism of Hopfield networks shows how these systems store and retrieve information through energy minimization. Introduced by John Hopfield in 1982, Hopfield networks are foundational in associative memory modeling. They use recurrent connections, with each neuron connected bidirectionally to the others, which enables the network to store and recall patterns.

When a pattern is presented to a Hopfield network, the network moves to a stable state through energy minimization, settling into a low-energy state that corresponds to a stored pattern. Hopfield networks store patterns by setting the connection weights, typically with a Hebbian outer-product rule, so that each stored pattern becomes a stable state. When presented with a noisy or partial version of a stored pattern, the network's energy minimization reconstructs and recalls the original pattern.

This pattern recall capability stems from the network's design, allowing it to recognize and reconstruct stored information. The associative memory mechanism of Hopfield Networks is crucial for understanding how neural networks can perform memory and pattern recognition tasks, paving the way for more advanced models in the future.
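A minimal recall sketch, assuming NumPy, 100 units, three random stored patterns, and 15% of the bits flipped in the probe. All of these values were chosen for illustration. With only three patterns, the network is far below its approximate storage capacity of about 0.14 N patterns for the Hebbian rule, so recall of the original pattern is expected.

```python
import numpy as np

def store(patterns):
    p = np.asarray(patterns, dtype=float)
    W = p.T @ p / p.shape[1]     # Hebbian outer-product rule
    np.fill_diagonal(W, 0.0)     # no self-connections
    return W

def recall(W, probe, rng, max_sweeps=20):
    """Asynchronous updates until a full sweep changes nothing (a stable state)."""
    s = np.asarray(probe, dtype=float).copy()
    for _ in range(max_sweeps):
        changed = False
        for i in rng.permutation(len(s)):
            h = W[i] @ s
            new = s[i] if h == 0 else (1.0 if h > 0 else -1.0)
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:
            break
    return s

rng = np.random.default_rng(1)
n = 100
patterns = rng.choice([-1.0, 1.0], size=(3, n))   # three random memories
W = store(patterns)

probe = patterns[0].copy()
flip = rng.choice(n, size=15, replace=False)      # corrupt 15% of the bits
probe[flip] *= -1
restored = recall(W, probe, rng)
```

With heavier corruption or many more stored patterns, the same loop can instead settle into a spurious stable state.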

Stability and Convergence Theory

To understand why Hopfield networks are robust, it helps to examine their stability and convergence properties. Stability means that stored patterns are fixed points of the network's dynamics, and that a state close to a stored pattern, for example one corrupted by noise, tends to fall back into it. This is crucial for associative memory tasks, where the network recalls a memory pattern from partial or noisy input.

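Convergence theory complements this by proving that the iterative dynamics always reach some stable state. With symmetric weights, no self-connections, and asynchronous (one-unit-at-a-time) updates, every update lowers the energy or leaves it unchanged. Because the network has finitely many states, it must eventually stop changing, whatever its starting point. The state it reaches is not always a stored pattern, however: spurious stable states also exist, especially as more patterns are stored. These properties make Hopfield networks a clear demonstration of distributed representations and content-addressable memory.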

To emphasize the importance of these theories, consider the following points:

- Stability Theory: Ensures the network reaches consistent states despite disturbances.

- Convergence Theory: Guarantees the network's iterative process leads to a stable state.

- Associative Memory: Hopfield networks excel in recalling complete patterns from partial inputs.

- Extended Models: The Bidirectional Associative Memory (BAM), introduced by Bart Kosko in 1988, extends the Hopfield idea to two layers, allowing associations to be recalled in both directions.
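The convergence guarantee depends on updating one unit at a time. The toy example below uses two units joined by a symmetric inhibitory connection, with weights chosen by hand for illustration. It contrasts the two update schemes: if every unit updates simultaneously from the old state, the network can oscillate forever between two states, while one-at-a-time updates settle immediately.

```python
import numpy as np

# Two units joined by a symmetric inhibitory connection (w12 = w21 = -1, no self-connections).
W = np.array([[0.0, -1.0],
              [-1.0, 0.0]])

def sign(h, old):
    """Threshold unit; on a tie (h == 0) the unit keeps its old state."""
    return np.where(h > 0, 1.0, np.where(h < 0, -1.0, old))

def synchronous_step(s):
    return sign(W @ s, s)                 # every unit reads the *old* state

def asynchronous_step(s):
    s = s.copy()
    for i in range(len(s)):               # fixed order, one unit at a time
        s[i] = sign(W[i] @ s, s[i])
    return s

start = np.array([1.0, 1.0])

sync_states = [start]
for _ in range(4):
    sync_states.append(synchronous_step(sync_states[-1]))

async_states = [start]
for _ in range(4):
    async_states.append(asynchronous_step(async_states[-1]))
```

Here `sync_states` alternates between [1, 1] and [-1, -1], while `async_states` reaches the stable state [-1, 1] after the first sweep and stays there.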

Hybrid Networks

Hybrid networks, explored by researchers such as Reilly and Cooper in the early 1980s, combined different strategies in a single multi-layer model, with each layer using a different problem-solving approach. By combining elements from different networks, they aimed to use multiple layers more effectively and to improve performance across diverse tasks. The approach emerged in the same period as the US-Japan conference on Cooperative/Competitive Neural Networks, part of a broader wave of renewed interest in the field.

Hybrid networks can be seen as a fusion of features from different neural architectures. Reilly and Cooper's work pointed the way toward more complex and versatile network structures, showing that combining diverse approaches could yield models adaptable to a wider range of problems.

The impact of these early hybrid networks was profound. They laid the groundwork for further advancements in neural network research and applications, setting the stage for the sophisticated models we see today. Their legacy continues to influence current AI research, proving that sometimes, the best solutions come from integrating diverse ideas.

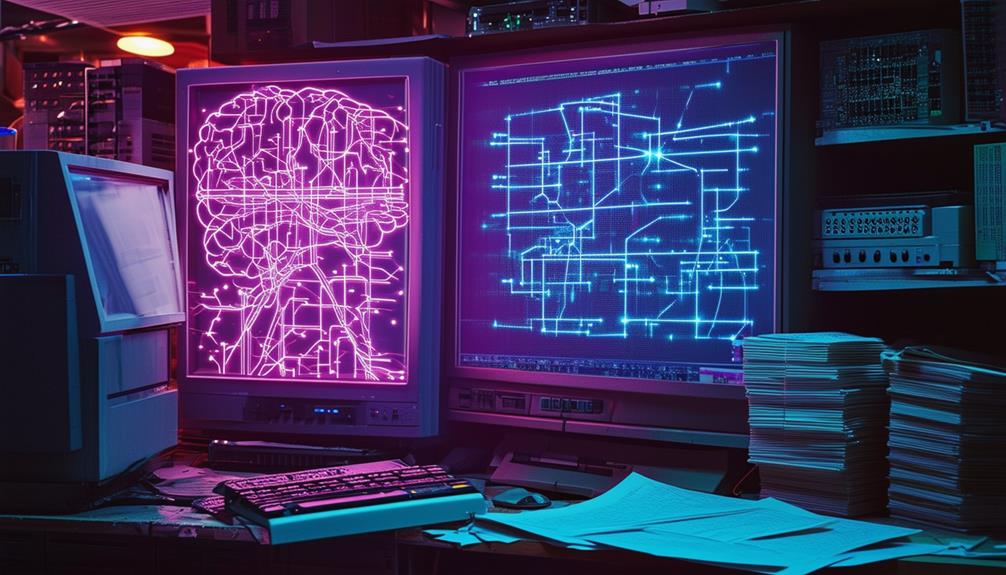

Development of Backpropagation

The popularization of backpropagation in the mid-1980s represented a major advance in neural network research. The algorithm transformed how neural networks were trained by efficiently computing weight updates for every layer of a multi-layer network, allowing networks with hidden layers to learn complex representations.

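Backpropagation works by running an input forward through the network, measuring the error at the output, and then propagating that error backward layer by layer using the chain rule. The result is the gradient of the global error with respect to every weight, and each weight is then adjusted in the direction that reduces the error (gradient descent). Before backpropagation, there was no efficient general method for training the hidden layers of a multi-layer network.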
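For a fully connected network with sigmoid units σ and squared error, the backward pass can be written compactly. The notation below is introduced here for illustration. It uses layers l = 1 to L, pre-activations z^(l) = W^(l) a^(l-1) + b^(l), activations a^(l) = σ(z^(l)) with a^(0) = x, target t, and learning rate η.

```latex
E = \tfrac{1}{2}\,\lVert a^{(L)} - t \rVert^2

\delta^{(L)} = \big(a^{(L)} - t\big) \odot \sigma'\big(z^{(L)}\big)

\delta^{(l)} = \big(W^{(l+1)}\big)^{\top} \delta^{(l+1)} \odot \sigma'\big(z^{(l)}\big), \qquad l = L-1, \dots, 1

\frac{\partial E}{\partial W^{(l)}} = \delta^{(l)} \big(a^{(l-1)}\big)^{\top}, \qquad
\frac{\partial E}{\partial b^{(l)}} = \delta^{(l)}

W^{(l)} \leftarrow W^{(l)} - \eta\, \frac{\partial E}{\partial W^{(l)}}
```

The second line is the "error propagated backward" step. Each layer's error signal is computed from the next layer's signal, so the gradients for all layers come out of one backward sweep.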

The critical contributions of backpropagation include:

- Efficiency: A single backward pass computes the gradient for every weight, at a cost comparable to the forward pass.

- Scalability: It facilitated the training of deeper, more complex neural networks.

- Error Minimization: Errors were propagated backward, leading to more accurate weight adjustments.

- Complex Representations: It allowed multi-layer networks to learn intricate patterns and representations.
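To make the backward pass concrete, here is a minimal NumPy sketch of backpropagation for a network with one hidden layer of sigmoid units and a squared-error loss. The layer sizes, initialization, input, and learning rate are arbitrary assumptions. The sketch checks the backpropagated gradients against central finite differences, a standard sanity test, and then takes one gradient-descent step.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(params, x):
    W1, b1, W2, b2 = params
    z1 = W1 @ x + b1
    a1 = sigmoid(z1)
    z2 = W2 @ a1 + b2
    a2 = sigmoid(z2)
    return z1, a1, z2, a2

def loss(params, x, t):
    a2 = forward(params, x)[3]
    return 0.5 * np.sum((a2 - t) ** 2)

def backprop(params, x, t):
    """Gradients of the squared error via the backward pass."""
    W1, b1, W2, b2 = params
    z1, a1, z2, a2 = forward(params, x)
    delta2 = (a2 - t) * a2 * (1 - a2)          # output error times sigmoid'(z2)
    delta1 = (W2.T @ delta2) * a1 * (1 - a1)   # error propagated back through W2
    return [np.outer(delta1, x), delta1, np.outer(delta2, a1), delta2]

def numerical_grad(params, x, t, eps=1e-6):
    """Central finite differences, used only to check the backprop result."""
    grads = []
    for p in params:
        g = np.zeros_like(p)
        for idx in np.ndindex(p.shape):
            old = p[idx]
            p[idx] = old + eps
            plus = loss(params, x, t)
            p[idx] = old - eps
            minus = loss(params, x, t)
            p[idx] = old                       # restore the original value
            g[idx] = (plus - minus) / (2 * eps)
        grads.append(g)
    return grads

rng = np.random.default_rng(0)
params = [rng.normal(size=(3, 2)), np.zeros(3), rng.normal(size=(1, 3)), np.zeros(1)]
x = np.array([1.0, 0.0])
t = np.array([1.0])

analytic = backprop(params, x, t)
numeric = numerical_grad(params, x, t)
max_diff = max(np.max(np.abs(a - n)) for a, n in zip(analytic, numeric))

# One gradient-descent step should lower the loss for a small enough learning rate.
lr = 0.1
before = loss(params, x, t)
stepped = [p - lr * g for p, g in zip(params, analytic)]
after = loss(stepped, x, t)
```

The finite-difference check costs two loss evaluations per weight, while the backward pass produces every gradient at once. That difference is what made training multi-layer networks practical.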

Training Challenges

Despite its advantages, backpropagation faced serious training challenges: learning was slow and computationally intensive. Practitioners working with multi-layer networks in the 1980s often needed a very large number of iterations to adjust the weights properly, and the computing power available at the time was limited.

One of the most notorious issues was the vanishing gradient problem, later analyzed formally by Sepp Hochreiter in 1991. As the error is propagated backward through many layers, the gradients of the loss can shrink exponentially, making it very difficult to update the weights of the earliest layers. In recurrent networks, where the same weights are applied at every time step, the same effect made learning long-range dependencies in sequences nearly impossible.
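A minimal sketch of why gradients vanish uses a toy chain of single sigmoid units, with every weight set to 1 and a fixed input of 0.5. These values are illustrative assumptions, not a real architecture. The sigmoid's derivative never exceeds 0.25, so each layer the error signal passes through multiplies it by at most 0.25 here. After ten layers, the signal has shrunk by a factor of at least 4^10, about 10^6.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def chain_gradients(depth, w=1.0, x=0.5):
    """Backpropagate through a chain of single sigmoid units a_k = sigmoid(w * a_{k-1}).

    Returns |d a_depth / d a_k| for k = depth-1 down to 0 (closest to the output first).
    """
    activations = [x]
    for _ in range(depth):
        activations.append(sigmoid(w * activations[-1]))
    grad = 1.0
    grads = []
    for k in range(depth, 0, -1):
        a = activations[k]
        grad *= w * a * (1.0 - a)   # chain rule: sigmoid'(z) = a * (1 - a)
        grads.append(abs(grad))
    return grads

grads = chain_gradients(depth=10)
```

Each entry of `grads` is smaller than the one before it, so the units nearest the input receive the weakest learning signal.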

To efficiently train multi-layer networks with backpropagation, advancements in computational power and optimization techniques were necessary. Without these, the slow learning rates severely hindered the network's ability to converge to a useful solution within a reasonable timeframe. The training challenges posed by backpropagation in the 1980s highlighted the need for more robust methods and hardware to fully harness the potential of neural networks.

Impact on AI

The introduction of backpropagation in the 1980s was a watershed moment for AI, changing the way neural networks learn from data. By enabling efficient weight updates in multi-layer networks, backpropagation made it possible for networks with hidden layers to learn complex representations, although very deep networks remained hard to train because of vanishing gradients.

The impact on AI was significant, leading to several key advancements:

- Accelerated Training: Backpropagation made training multi-layer networks practical, allowing researchers to handle larger datasets and tackle more complex problems.

- Enhanced Accuracy: The algorithm systematically fine-tuned weights, thereby improving model accuracy across a wide array of tasks.

- Advanced Model Development: The capacity to train multi-layer networks paved the way for sophisticated models capable of solving intricate challenges in fields like computer vision and natural language processing.

- Increased Research Activity: The success of backpropagation spurred renewed interest in neural network research, leading to the establishment of new conferences and societies focused on advancing AI technologies.

These advancements highlight the pivotal role of backpropagation in shaping modern AI, enabling the creation of more accurate, efficient, and complex models. As we trace the evolution of AI, it becomes clear that backpropagation was a foundational breakthrough, setting the stage for the sophisticated technologies we depend on today.

Future Directions

As we look ahead, the future of neural networks hinges on advancements in hardware development and cutting-edge chip technologies. Continued innovation in integrated circuits is vital for enhancing the efficiency and performance of these systems. The creation of silicon compilers for optimized circuits remains a pivotal focus, playing a key role in providing the computational power required for advanced machine learning and artificial intelligence applications.

Research and development in digital, analog, and optical chip technologies are imperative for the evolution of neural networks. Analog signals that mimic brain neurons offer promising improvements in functionality, expanding the potential of neural networks. This approach could lead to more efficient and powerful AI systems.

The adoption of commercial optical chips for neural network applications is another exciting prospect. Although these technologies are still in their developmental phase, their integration could transform the field by offering faster and more efficient processing. Collaboration between hardware and software development will be crucial in shaping the future of neural networks and in driving new breakthroughs in AI and machine learning.

Conclusion

The 1980s marked a transformative era for neural networks, driven by groundbreaking contributions from pioneers like Hopfield, Reilly, Cooper, and the backpropagation team of Rumelhart, Hinton, and Williams. These advancements addressed significant training challenges and established the foundation for modern deep learning. Their impact on AI has been profound, paving the way for future innovations in neural network architectures and applications. While much progress has been made, the journey continues, promising even more exciting developments ahead.