The Development of the First Neural Networks

You're about to explore how Warren McCulloch and Walter Pitts's pioneering work in 1943 laid the foundation for neural networks, profoundly influencing artificial intelligence. Their collaboration introduced threshold logic, a cornerstone for future advancements. Additionally, Donald Hebb's principles of Hebbian learning in 1949 added new dimensions to the field. As you delve into these early developments, you'll uncover how these foundational ideas evolved over the decades, facing numerous challenges and setbacks. What key breakthroughs followed?

Early Beginnings

The development of neural networks began with Warren McCulloch and Walter Pitts's 1943 model, which used electrical circuits to describe how neurons in the brain might work. This foundational work inspired further exploration into how neural networks could mimic the human brain's functionality.

In 1949, Donald Hebb introduced the concept of Hebbian learning, emphasizing the importance of strengthening neural pathways through repeated use. This notion was crucial in understanding how neural networks could learn and adapt over time. Hebb's theoretical framework significantly influenced subsequent research and the development of more advanced neural network models.

In the 1950s, Nathaniel Rochester made an initial attempt to simulate a neural network, although he encountered considerable challenges. Substantial progress came in 1959 with Bernard Widrow and Marcian Hoff's introduction of ADALINE (Adaptive Linear Neuron) and MADALINE (Multiple ADALINE). These models represented a significant leap in neural network development.

Threshold Logic

Threshold logic, the idea at the heart of McCulloch and Pitts's 1943 model, gave early neural networks a simple way to make binary decisions. A threshold unit sums its weighted input signals and checks whether the total reaches a fixed threshold, producing an output of either 0 or 1. This method is foundational for binary classification tasks.

In the 1950s, Frank Rosenblatt built on threshold logic when he developed the perceptron, a single-layer neural network that takes several input signals, processes them through weighted connections, and applies a threshold function to produce an output. Unlike a threshold unit with fixed weights, the perceptron could learn its weights from examples. This model demonstrated that neural networks could be used for pattern recognition, marking a significant advancement in artificial intelligence.

Threshold logic thus laid the groundwork for early neural networks and their ability to recognize patterns in data. The perceptron showed that machines could make decisions based on input signals, a vital capability for the more complex neural network architectures that followed.
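To make the threshold idea concrete, here is a minimal Python sketch of a single threshold unit. The function names, the unit weights, and the thresholds of 2 and 1 are illustrative assumptions chosen for this example, not values taken from the original 1943 paper.

```python
from typing import Sequence


def threshold_unit(inputs: Sequence[float], weights: Sequence[float], threshold: float) -> int:
    """Return 1 if the weighted sum of the inputs reaches the threshold, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


# Illustrative choices: with unit weights on two binary inputs,
# a threshold of 2 behaves like AND and a threshold of 1 behaves like OR.
def logical_and(a: int, b: int) -> int:
    return threshold_unit([a, b], [1, 1], threshold=2)


def logical_or(a: int, b: int) -> int:
    return threshold_unit([a, b], [1, 1], threshold=1)


# Print the truth table: a, b, AND(a, b), OR(a, b)
for a in (0, 1):
    for b in (0, 1):
        print(a, b, logical_and(a, b), logical_or(a, b))
```

The same unit computes different logical functions purely by changing the threshold, which is part of why threshold logic was such a flexible building block.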

Hebbian Learning

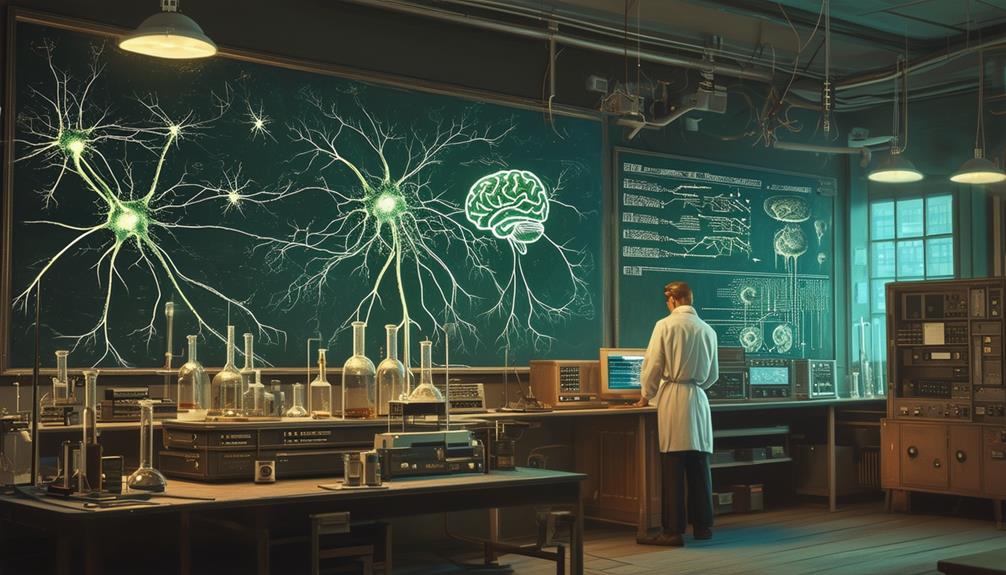

Hebbian learning is captivating because it explains how synaptic weights change through repeated neuron activity. This principle, often summarized as "neurons that fire together wire together," forms the basis of learning through association. Grasping these neuron activity patterns is crucial for understanding neural network development.

Synaptic Weight Changes

Donald Hebb's groundbreaking theory of Hebbian learning posits that synaptic connections strengthen when neurons fire together. This principle, often summarized by the phrase 'cells that fire together wire together,' is crucial for understanding how neural networks adapt and learn from patterns. Strengthened synaptic connections underlie our brain's ability to process information, making learning and memory possible.

In neural networks, Hebbian learning provides a mechanism for synaptic weight changes. Simultaneous firing of neurons makes their connections more robust, allowing the network to encode information and enhance performance over time. Essentially, the network learns by adjusting its weights based on encountered patterns.
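A rough sketch of this weight change in Python follows. It assumes the plain form of the Hebb rule, where each weight grows by the product of its input activity and the output activity, scaled by a learning rate. The function name, the learning rate of 0.1, and the three-input setup are hypothetical choices for illustration.

```python
def hebbian_update(weights, pre, post, learning_rate=0.1):
    """Plain Hebb rule: each weight grows by learning_rate * pre[i] * post."""
    return [w + learning_rate * x * post for w, x in zip(weights, pre)]


weights = [0.0, 0.0, 0.0]

# Inputs 0 and 1 are active together with the output; input 2 stays silent.
for _ in range(5):
    weights = hebbian_update(weights, pre=[1, 1, 0], post=1)

# The first two weights have grown; the third is unchanged.
print(weights)
```

Note that this plain rule only ever strengthens connections between co-active units, so weights grow without bound under repeated co-activation; practical variants usually add some form of normalization or decay.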

Hebbian learning is not merely a theoretical concept; it is a foundational principle in neuroscience and artificial intelligence. It has influenced the design of artificial neural networks, although most modern networks are trained with gradient-based methods such as backpropagation rather than a pure Hebbian rule. Understanding Hebbian learning still helps explain how synaptic weight changes drive the adaptability of neural networks.

Learning Through Association

Hebbian Learning, introduced in 1949 by Donald Hebb, revolutionized our understanding of neural pathway strengthening through simultaneous activation. This groundbreaking concept posits that when two neurons fire simultaneously, their connection is reinforced. Essentially, it is an associative learning process where correlated neuronal activities bolster synaptic connections.

This learning rule is crucial for how neural networks adapt from experience and form memories. By emphasizing the importance of timing and correlation, Hebbian Learning laid the foundation for numerous advancements in artificial neural networks and our comprehension of brain function. The principle is often summarized as 'cells that fire together, wire together,' underscoring the significance of synaptic plasticity.

Key points about Hebbian Learning include:

- Associative Learning: Reinforcement of neural pathways through correlated activities.

- Synaptic Plasticity: Strengthening of synapses based on simultaneous activation.

- Memory Formation: Critical for understanding how experiences shape neural networks.

- Foundational Principle: Integral to advancements in artificial neural networks.

- Simple yet Powerful: A straightforward learning rule with profound implications.

Neuron Activity Patterns

How do neurons synchronize their activity to strengthen their connections and facilitate learning? The answer lies in Hebbian learning, a theory proposed by Donald Hebb in 1949. According to this theory, neurons strengthen their connections when they are active simultaneously. Simply put, neurons that fire together, wire together. This synchrony influences memory formation and various learning processes, laying the groundwork for neural plasticity.

Neural plasticity refers to the brain's ability to modify synaptic connections based on activity patterns. When two neurons frequently activate at the same time, their connection becomes stronger, making future communication easier. This mechanism is crucial for understanding how learning and memory function at a cellular level.

Hebbian learning isn't just a biological phenomenon; it also forms the foundation for artificial neural networks. These networks mimic the brain's learning mechanisms by adjusting the strength of connections based on activity patterns. By implementing Hebbian learning principles, artificial neural networks can simulate human-like learning processes, driving advancements in machine learning and artificial intelligence.

The Perceptron

Frank Rosenblatt's introduction of the Perceptron in 1957 marked a significant milestone in the development of artificial neural networks. As a pioneering model, the Perceptron consisted of a single layer of interconnected neurons with adjustable weights, allowing it to classify inputs into two distinct categories by learning from labeled training data.

The Perceptron was designed to recognize and learn simple linear patterns, enabling it to make binary decisions. This capability transformed how machines could learn from data. Key features of the Perceptron include:

- Single layer: A straightforward architecture featuring one layer of neurons.

- Adjustable weights: The ability to modify connection weights for improved accuracy.

- Labeled training data: Learning from examples with known outcomes.

- Linear patterns: Proficiency in identifying and learning linear relationships in data.

- Binary decisions: The ability to classify inputs into one of two categories.

Rosenblatt's Perceptron laid the groundwork for future advancements in neural networks, demonstrating the potential for machines to learn and adapt.
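The features listed above can be sketched in a few lines of Python. This is a minimal illustration of the classic perceptron learning rule (nudge the weights by the error times the input whenever a prediction is wrong), not a reconstruction of Rosenblatt's original hardware. The function names, the learning rate, the epoch limit, and the choice of the AND function as training data are assumptions made for the example.

```python
def predict(weights, bias, x):
    """Threshold the weighted sum: output 1 if it is positive, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Perceptron rule: on each mistake, move the weights toward the target."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)
            if error != 0:
                errors += 1
                weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
                bias += learning_rate * error
        if errors == 0:  # every sample classified correctly, so stop early
            break
    return weights, bias


# Labeled training data for logical AND, a linearly separable pattern.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_SAMPLES)
predictions = [predict(weights, bias, x) for x, _ in AND_SAMPLES]
print(predictions)  # [0, 0, 0, 1]
```

Because AND is linearly separable, the rule settles on a separating line after a handful of passes over the data.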

Challenges and Setbacks

The early development of neural networks faced significant challenges, ranging from non-differentiable learning functions to exaggerated claims that led to funding cuts. When researchers first attempted to simulate a neural network in the 1950s, they quickly encountered obstacles. One of the primary issues was that the learning functions in use were not differentiable, which made it difficult for the networks to learn effectively. These flawed learning procedures were a fundamental setback in the initial stages of neural network research.

Additionally, exaggerated promises about the capabilities of neural networks created unrealistic expectations. When these promises weren't met, funding and research efforts were drastically reduced, stalling further advancements. The setbacks extended beyond technical issues; philosophical concerns also played a role. The idea of 'thinking machines' sparked fears and ethical debates, hindering progress and implementation of self-programming computers.

Despite these obstacles, the development of ADALINE and MADALINE in 1959 provided a glimmer of hope. These advancements marked a significant milestone, demonstrating that, even with setbacks, progress was possible. The road to the development of neural networks was fraught with challenges, but each obstacle provided valuable lessons for future breakthroughs.

Publication of 'Perceptrons'

The publication of *Perceptrons* in 1969 by Marvin Minsky and Seymour Papert had a profound impact on AI research. Their analysis of the limitations of single-layer neural networks reshaped the field, highlighting significant challenges and sparking extensive debate. Despite its critical tone, the book laid essential groundwork for future advancements in neural network technology.

Impact on AI Research

The publication of *Perceptrons* in 1969 by Marvin Minsky and Seymour Papert significantly shaped the trajectory of AI research. By highlighting the limitations of single-layer neural networks, their work caused a pivotal shift in focus within the AI community. They emphasized the inadequacies of early neural networks, underscoring the need for more complex models and advanced training methods, which had a profound impact on the direction of AI research.

Initially, their critique led to a temporary decline in neural network research and funding. However, this setback was not without its benefits. The insights from *Perceptrons* ultimately paved the way for breakthroughs in neural network design. Researchers began developing multi-layered networks, which overcame the limitations identified by Minsky and Papert. Additionally, the book spurred the creation of more sophisticated algorithms and training techniques, essential to the advancements seen in modern AI.

The influence of *Perceptrons* on AI research can be summarized as follows:

- Exposed limitations of single-layer neural networks.

- Redirected focus towards complex models and multi-layered networks.

- Inspired novel training methods to enhance network performance.

- Triggered a temporary decline but led to long-term progress.

- Shaped future research directions in AI and machine learning.

Limitations and Critiques

In 1969, the publication of *Perceptrons* by Marvin Minsky and Seymour Papert significantly impacted the trajectory of neural network research. The book critically analyzed the limitations of single-layer neural networks, particularly their inability to solve problems that are not linearly separable, such as XOR. This critique, while valid for its time, led to a decline in research funding and interest in neural networks during the 1970s.
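The XOR limitation can be checked directly. The sketch below is a brute-force illustration, not Minsky and Papert's own argument: it searches a grid of candidate weights and biases for a single threshold unit that reproduces a given truth table. The grid range and step size are arbitrary assumptions.

```python
from itertools import product

AND = {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}
XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}


def realizes(truth_table, w1, w2, bias):
    """True if a single threshold unit with these parameters matches the table."""
    return all(
        (1 if w1 * a + w2 * b + bias > 0 else 0) == out
        for (a, b), out in truth_table.items()
    )


def search(truth_table, grid):
    """Return the first (w1, w2, bias) on the grid that works, or None."""
    for w1, w2, bias in product(grid, repeat=3):
        if realizes(truth_table, w1, w2, bias):
            return (w1, w2, bias)
    return None


# Candidate values from -5.0 to 5.0 in steps of 0.5 (an arbitrary choice).
grid = [i / 2 for i in range(-10, 11)]

print("AND:", search(AND, grid))  # some working parameters are found
print("XOR:", search(XOR, grid))  # None
```

No finer grid would help. XOR requires bias ≤ 0, w1 + bias > 0, w2 + bias > 0, and w1 + w2 + bias ≤ 0. Adding the two middle conditions gives w1 + w2 + 2·bias > 0, so w1 + w2 + bias > -bias ≥ 0, which contradicts the last condition.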

Minsky and Papert's analysis, which drew on early work by McCulloch and Pitts, highlighted that single-layer perceptrons were only capable of simple pattern recognition and not more intricate tasks. This led to a perception that Artificial Neural Networks (ANNs) were impractical for complex problem-solving.

Key critiques from *Perceptrons* included:

| Key Critique | Impact | Resulting Effect |
|---|---|---|
| Limited problem-solving capabilities | Reduced research funding | Slowed development of ANNs |
| Inability to handle non-linear problems | Decreased interest in neural networks | Shifted AI research focus |
| Overemphasis on limitations | Increased skepticism in the field | Delayed advancements in learning algorithms |

These critiques were pivotal in shaping the research direction of neural networks during that era.

ADALINE and MADALINE

ADALINE (Adaptive Linear Neuron) and MADALINE (Multiple ADALINE), developed by Bernard Widrow and Marcian Hoff in 1959, brought neural networks to practical signal processing, most notably noise elimination such as filtering echoes on telephone lines. These models were groundbreaking because they used a learning procedure to adjust weight values based on input data, which significantly enhanced their performance.

ADALINE, the simpler of the two, focused on linear signal processing and noise reduction. It utilized a single-layer neural network to process inputs and adjust weights to minimize error. MADALINE, in contrast, extended this concept to multiple layers, enabling more complex data processing and further noise elimination.
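The weight adjustment described above is commonly associated with the Widrow-Hoff least-mean-squares (LMS) rule, in which the error is measured on the linear output before any thresholding. The Python sketch below illustrates that rule under stated assumptions: the function name, the learning rate, the epoch count, and the noiseless training data generated from target = 2x - 1 are all hypothetical choices for this example.

```python
def train_adaline(samples, epochs=50, learning_rate=0.1):
    """LMS rule: adjust weights in proportion to the error of the linear output."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            # Linear output: no threshold is applied before measuring the error.
            output = sum(w * xi for w, xi in zip(weights, x)) + bias
            error = target - output
            weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
            bias += learning_rate * error
    return weights, bias


def mean_squared_error(samples, weights, bias):
    """Average squared difference between targets and linear outputs."""
    total = 0.0
    for x, target in samples:
        output = sum(w * xi for w, xi in zip(weights, x)) + bias
        total += (target - output) ** 2
    return total / len(samples)


# Hypothetical noiseless data drawn from target = 2 * x - 1 on [-1, 1].
samples = [((k / 10,), 2 * (k / 10) - 1) for k in range(-10, 11)]

weights, bias = train_adaline(samples)
print(weights, bias, mean_squared_error(samples, weights, bias))
```

Unlike the perceptron rule, which only reacts to misclassifications, this update keeps shrinking the error even when the sign of the output is already correct, which suits continuous signals such as those in filtering tasks.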

Widrow and Hoff's innovations laid the groundwork for more advanced neural network designs, marking a significant milestone in artificial intelligence. Their contributions demonstrated the practical applications of neural networks, inspiring future research and development.

Key takeaways include:

- ADALINE: A single-layer neural network model.

- MADALINE: An extension of ADALINE with multiple layers.

- Learning Procedure: Adjusts weight values to minimize error.

- Signal Processing: Enhanced through noise elimination.

- Pioneers: Bernard Widrow and Marcian Hoff's foundational work.

These advancements underscored the potential of neural networks, propelling the field forward.

AI Winter

The AI winter was a period marked by stagnation and skepticism in AI research, significantly hindering the progress of neural networks. During this time, exaggerated claims about AI's potential led to unmet expectations, causing funding to dwindle and research efforts to face substantial setbacks. The limited computational power available at the time further exacerbated these challenges, making it difficult to achieve meaningful advancements in neural networks.

This era of AI winter redirected focus away from AI research and development, resulting in a considerable slowdown in neural network progress. The pervasive skepticism about AI capabilities made it challenging for researchers to secure the necessary resources to continue their work. These difficulties delayed the resurgence and evolution of neural networks and other AI technologies.

Here's an overview of the AI winter's impact:

| Issue | Effect | Consequence |
|---|---|---|
| Unmet Expectations | Reduced funding | Limited research capabilities |
| Exaggerated Claims | Increased skepticism | Decreased support for AI |
| Limited Computation | Hindered advancements | Slower progress in neural networks |
| Focus Shift | Away from AI research | Delayed evolution in AI technologies |

Understanding the AI winter provides insight into the challenges faced by early AI pioneers and the factors contributing to the slow development of neural networks during this period.

Resurgence of Neural Networks

The resurgence of neural networks was fueled by renewed academic interest, increased computational power, and breakthrough applications across various fields. Researchers revisited and enhanced existing models, leading to significant advancements. This period marked a pivotal moment, enabling neural networks to revolutionize areas such as healthcare, finance, and autonomous technology.

Renewed Academic Interest

In the 1980s, academic interest in neural networks experienced a significant revival, spurred by key developments such as the Hopfield Network and Japan's focused initiatives. This resurgence was not ephemeral; it marked a crucial turning point in the academic trajectory of neural networks. John Hopfield's introduction of the Hopfield Network demonstrated the potential of neural networks to tackle complex problems, generating widespread enthusiasm. Concurrently, Japan's ambitious projects, including the Fifth Generation Computer Systems initiative, further energized this revival.

A landmark achievement during this era was the rediscovery and validation of the Backpropagation algorithm. This algorithm was instrumental in enhancing the training of neural networks, making them applicable to real-world scenarios. Its potential was swiftly recognized by the academic community, catalyzing a surge in research and specialized conferences dedicated to neural networks.
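To show what backpropagation adds over single-layer training, here is a compact Python sketch of a network with one hidden layer trained on XOR by full-batch gradient descent. The network size, learning rate, epoch count, random seed, and all function names are illustrative assumptions, and whether a given run reaches a good solution depends on the random initialization.

```python
import math
import random

XOR = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(hidden, rng):
    """Small random weights for a 2-input, `hidden`-unit, 1-output network."""
    return {
        "w1": [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)],
        "b1": [rng.uniform(-1, 1) for _ in range(hidden)],
        "w2": [rng.uniform(-1, 1) for _ in range(hidden)],
        "b2": rng.uniform(-1, 1),
    }


def forward(p, x):
    """Return hidden activations and the network output for one input."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(p["w1"], p["b1"])]
    y = sigmoid(sum(w * hj for w, hj in zip(p["w2"], h)) + p["b2"])
    return h, y


def loss(p, data):
    """Mean squared error over the data set."""
    return sum((forward(p, x)[1] - t) ** 2 for x, t in data) / len(data)


def gradients(p, data):
    """Backpropagation: gradient of sum over examples of 0.5 * (y - t)^2."""
    hidden = len(p["w2"])
    g = {"w1": [[0.0, 0.0] for _ in range(hidden)],
         "b1": [0.0] * hidden, "w2": [0.0] * hidden, "b2": 0.0}
    for x, t in data:
        h, y = forward(p, x)
        delta_out = (y - t) * y * (1 - y)  # error signal at the output unit
        g["b2"] += delta_out
        for j in range(hidden):
            # Propagate the output error backward through weight w2[j].
            delta_hidden = delta_out * p["w2"][j] * h[j] * (1 - h[j])
            g["w2"][j] += delta_out * h[j]
            g["b1"][j] += delta_hidden
            for i in range(2):
                g["w1"][j][i] += delta_hidden * x[i]
    return g


def train(data, hidden=3, epochs=5000, lr=0.5, seed=0):
    """Full-batch gradient descent; returns parameters and loss per epoch."""
    p = init_params(hidden, random.Random(seed))
    history = [loss(p, data)]
    for _ in range(epochs):
        g = gradients(p, data)
        p["b2"] -= lr * g["b2"]
        for j in range(hidden):
            p["w2"][j] -= lr * g["w2"][j]
            p["b1"][j] -= lr * g["b1"][j]
            for i in range(2):
                p["w1"][j][i] -= lr * g["w1"][j][i]
        history.append(loss(p, data))
    return p, history


params, history = train(XOR)
print("loss before:", history[0], "after:", history[-1])
print([round(forward(params, x)[1], 2) for x, _ in XOR])
```

The key step is `delta_hidden`: the output error is passed backward through the second-layer weights, giving each hidden unit its own error signal. That is what lets the hidden layer learn features that make XOR solvable.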

Key developments during this period included:

- The establishment of dedicated neural network conferences.

- Increased funding and resources for neural network research.

- Enhanced collaboration between international institutions.

- A significant rise in academic publications on neural networks.

- The Backpropagation algorithm becoming foundational in neural network research.

This chapter of renewed academic interest laid the groundwork for the subsequent advancements and applications in neural networks, marking a pivotal moment in the field's history.

Enhanced Computational Power

The surge in computational power during the late 20th and early 21st centuries revolutionized neural networks, transforming them into practical tools for tackling complex real-world problems. With advanced hardware, tasks that once seemed impossible became achievable, allowing neural networks to more accurately mimic the intricate workings of neurons in the brain.

The rediscovery of the Backpropagation algorithm was a milestone. It enabled efficient training of multi-layer networks of artificial neurons by using gradients of the error to adjust every weight in the network, although gradient-based training does not guarantee a globally optimal solution. The foundational McCulloch-Pitts neuron model now had the computational power necessary to drive significant advancements.

Key developments, such as the Hopfield Net and Japan's concentrated efforts on AI, also played essential roles. As computational power increased, so did the scale and complexity of neural networks, leading to more sophisticated architectures and applications.

Consider this emotional timeline:

| Era | Emotion |
|---|---|
| 1980s | Frustration |
| Late 20th Century | Hope |
| Early 21st Century | Excitement |
| Rediscovery of Backpropagation | Inspiration |
| Current | Empowerment |

The period saw a flourishing of conferences and collaborations, reflecting a renewed global interest in neural networks. Enhanced computational power didn't just solve existing problems; it opened up new possibilities, expanding the horizons of what could be achieved.

Breakthrough Applications Emergence

Following the AI winter, neural networks experienced a remarkable resurgence, driven by breakthrough applications that showcased their potential. This revival was significantly fueled by the rediscovery of backpropagation in 1986, which greatly improved the training of neural networks. Much earlier applications, such as noise elimination through ADALINE and MADALINE in 1959, had already highlighted the practical value of these technologies.

The late 1980s saw the establishment of Neural Networks conferences, which became hubs for sharing innovations and ideas, leading to rapid advancements and new applications.

Key developments from this period include:

- Hopfield Net: A neural network applied effectively in optimization problems.

- Japan's Fifth Generation Computer Systems project: A national initiative aimed at integrating neural networks into advanced computing.

- Backpropagation: A rediscovered technique that significantly improved neural network training efficiency.

- ADALINE and MADALINE: Early implementations that successfully addressed noise elimination, demonstrating the feasibility of neural networks in real-world scenarios.

- Renewed Research and Applications: A surge in creative applications showcased the vast potential of neural networks.

These breakthrough applications marked the emergence of neural networks as a powerful tool in computing, setting the stage for future innovations.

Technological Advancements

Technological advancements in neural networks have profoundly transformed the field, introducing pivotal models and techniques over the decades. In 1959, Bernard Widrow and Marcian Hoff developed the ADALINE (Adaptive Linear Neuron) and MADALINE (Multiple ADALINE) models, foundational for noise reduction technology. These early advancements set the stage for more sophisticated neural networks.

The origins of artificial neuron models trace back to the 1940s when Warren McCulloch and Walter Pitts created the first model, paving the way for deeper explorations in neural networks. Their work laid the groundwork for future developments, including deep learning and Hebbian networks, which are integral to contemporary applications.

A crucial milestone was the rediscovery of backpropagation in 1986, significantly enhancing the efficiency of neural network training and optimization. This breakthrough improved the performance and accuracy of neural networks, making them more practical for a variety of applications.

Key developments in neural networks can be summarized as follows:

| Year | Development | Innovators |
|---|---|---|
| 1940s | Artificial Neuron Model | McCulloch & Pitts |
| 1959 | ADALINE, MADALINE | Widrow & Hoff |
| 1972 | Analog ADALINE Circuits | Kohonen & Anderson |
| 1975 | Unsupervised Multilayer Network | Various |
| 1986 | Backpropagation | Various |

The establishment of neural network conferences in the late 1980s highlighted the increasing interest and rapid advancements in the field, leading to more sophisticated and complex neural network designs. These conferences provided a platform for researchers to share their findings and collaborate, driving further innovation in neural network technologies.

Conclusion

You've journeyed through the captivating history of neural networks, from McCulloch and Pitts's early conceptual models to the innovative ADALINE and MADALINE systems. Despite numerous challenges and setbacks, these pioneering efforts laid the critical groundwork for today's advanced AI technologies. As technology continues to evolve, neural networks are poised to play an increasingly pivotal role in shaping the future of artificial intelligence. Stay curious and keep exploring—there's always more to discover in this ever-evolving field.